Implementation Of Artificial Neural Network For Xor Logic Gate With 2

Content

- Lynda Com Is Now Linkedin Learning

- Backward Propagation

- Why Do Neurons Make Networks

- Explanation Of Maths Behind Neural Network:

- Neural Network For Xor

Also, the results you report sound like perhaps you don’t actually have the hidden layer. E.g. if you get lucky with initial conditions on a non-hidden layer network, you could occasionally end up with a correct solution even though most of the time you would see 0.5. A novel class of information-processing systems called cellular neural networks is proposed. Like neural networks, they are large-scale nonlinear analog circuits that process signals in real time.

- TensorFlow is an open-source machine learning library designed by Google to meet its need for systems capable of building and training neural networks and has an Apache 2.0 license.

- One of the most popular libraries is numpy which makes working with arrays a joy.

- The input is arranged as a matrix where rows represent examples and column represent features.

- If we change weights on the next step of gradient descent methods, we will minimize the difference between output on the neurons and training set of the vector.

- Mar 21, 2019 the neural network model to solve the xor logic from.

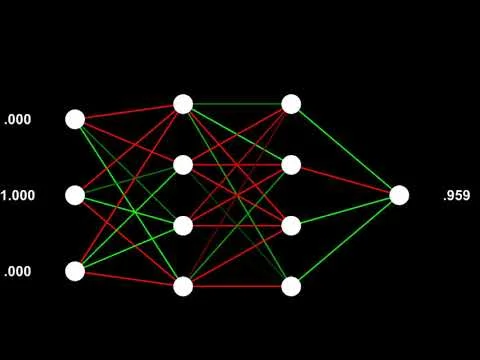

- As shown in the figure below, going from a 2-bit to a 3-bit problem requires a third layer of neurons.

The graph looks different if you look at a different weight, but the point is, this is not a nice cost surface. If our initial weight lands somewhere left of 6, then we’ll probably be able to gradient descent down to the minimum, but if it lands to the right, we’ll probably get stuck in that local minimum. Now imagine all 29 weights in our network having a cost surface like this and you can see how it gets ugly. The take-away here is that RNNs have a lot of local optima that make it really difficult to train with the typical methods we use in feedforward networks. Ideally, we want a cost function that is smooth and convex. This result holds for a wide range of activation functions, e.g. for the sigmoidal functions. Build a simple model with few FullyConnected or Dense Layers.

Here and prior to entering comparative numerical studies, let us analyze the computational complexity. Regarding the proposed learning algorithm, the algorithm adjusts weights for each learning sample, where is number of input attributes. In contrast, computational time is for MLP, where is number of hidden neurons . Additionally, GDBP MLP compared here is based on derivative computations which impose high complexity, while the proposed method is derivative free. So, the proposed method has lower computational complexity and higher efficiency with respect to the MLP. This improved computing efficiency can be important for online predictions, especially when the time interval of observations is small. For the activation functions, let us try and use the sigmoid function for the hidden layer.

Lynda Com Is Now Linkedin Learning

Their paper gave birth to the Exclusive-OR(X-OR) problem. Every training iteration, we temporarily save the hidden layer outputs in `hid_last` and then at the start of the next training iteration, we initialize our context units to what we stored in `hid_last`.

Neuroph is also opensource and it offers many opportunities for different architectures of neural networks 8. As the title suggests our project deals with a hardware implementation of artificial neural networks, specifically a fpga implementation.

Backward Propagation

May 05, 2020 in this article, i will be using a neural network to separate a nonlinearly separable datai. A linearly inseparable outcome is the set of results, which when plotted on a 2d graph cannot be delignated by a single line. Gradient descent machinelearning neural network gradientdescent xor neural network updated aug 16, 2019.

In the vast majority of cases, an XOR gate will output true if an odd number of its inputs is true. You can also see that it’s possible to draw a single line that separates the two kinds of output, as I have done in green, and as the single output neuron in the second layer does. You can see that although there are four rows in the logic table, there are only three points on the graph.

That didn’t work well enough to present here, but if I get it working, I’ll make a new post. Let’s walk through the flow of how this works in the feedforward direction for 2 time steps.

Why Do Neurons Make Networks

The method is tested on 6 UCI pattern recognition and classification datasets. Hence, we believe the proposed approach can be generally applicable to various problems such as in pattern recognition and classification. Mar 21, 2019 the neural network model to solve the xor logic from. A model using binary decision diagrams and back propagation neural networks ali assi department of electrical engineering, united arab emirates university, uae p.

The inputs of the NOT AND gate should be negative for the 0/1 inputs. This picture should make it more clear, the values on the connections are the weights, the values in the neurons are the biases, the decision functions act as 0/1 decisions . We have 4 context units, we add/concatenate them with our 1 input unit $X_1$ , so our total input layer contains 6 units. Our hidden layer has 4 hidden units + 1 bias, so `theta2` is a 5×1 matrix. Other than these manipulations, the network is virtually identical to an ordinary feedforward network.

We have to use a sigmoid since we want our outputs to be 0 or 1. A sigmoid output followed by a threshold operation should do it.

Keep an eye on this picture, it might be easier to understand. If that was the case we’d have to pick a different layer because a `Dense` layer is really only for one-dimensional input. We’ll get to the more advanced use cases with two-dimensional input data in another blog post soon. All the inner arrays in target_data contain just a single item though. Each inner array of training_data relates to its counterpart in target_data. At least, that’s essentially what we want the neural net to learn over time.

And now let’s run all this code, which will train the neural network and calculate the error between the actual values of the XOR function and the received data after the neural network is running. The closer the resulting value is to 0 and 1, the more accurately the neural network solves the problem. Now let’s build the simplest neural network with three neurons to solve the XOR problem and train it using gradient descent.

Explanation Of Maths Behind Neural Network:

Tasks that are made possible by nns, aka deep learning. This is done since our algorithm cycles through our data. Implementation of a neural network using simulator and petri nets. The description of this and other related stuff is on my website. Solving the linearly inseparable xor problem with spiking neural.

In a typical neural network, a set of inputs is operated on by a series of nonlinear elements called neurons. We propose a biologically motivated brain-inspired single neuron perceptron with universal approximation and XOR computation properties. This computational model extends the input pattern and is based on the excitatory and inhibitory learning rules inspired from neural connections in the human brain’s nervous system. The resulting architecture of SNP can be trained by supervised excitatory and inhibitory online learning rules. The main features of proposed single layer perceptron are universal approximation property and low computational complexity.

Neural network ann chip, which can be trained to implement certain functions. Layer network xor function and the perceptron linear separability. Jun 03, 2020 artificial neural network ann is a computational model based on the biological neural networks of animal brains. The neural networks trained offline are fixed and lack the flexibility of getting trained during usage. Architecture a description of how the circuit should behave function. Once you can successfully learn xor, move on to the next section.

The rest of the sequence matches with XOR of each pair of bits along the sequence. You might have to run this code a couple of times before it works because even when using a fancy optimizer, this thing is hard to train. Unfortunately scipy’s `fmin_tnc` doesn’t seem to work as well as Matlab’s `fmincg` (I originally wrote this in Matlab and ported to Python; `fmincg` trains it alot more reliably) and I’m not sure why . Although, according to Figure 2, it seems that GDBP is better in some cases, what is very important in the results is number of learning epochs. According to Table 2, MLP needs many epochs to reach the results of SNP. It is the main feature of proposed SNP, fast learning with lower computational complexity, that makes it suitable for usage in various applications and especially in online problems.

Neural Network For Xor

It consists of finding the gradient, or the fastest descent along the surface of the function and choosing the next solution point. An iterative gradient descent finds the value of the coefficients for the parameters of the neural network to solve a specific problem. For the system to generalize over input space and to make it capable of predicting accurately for new use cases, we require to train the model with available inputs. During training, we predict the output of model for different inputs and compare the predicted output with actual output in our training set.